In the last edition we learnt how a neuron works.

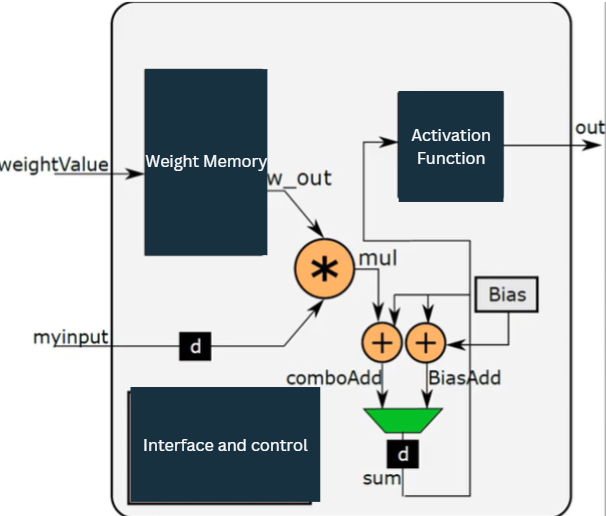

Here we will learn about the hardware design of a neuron.

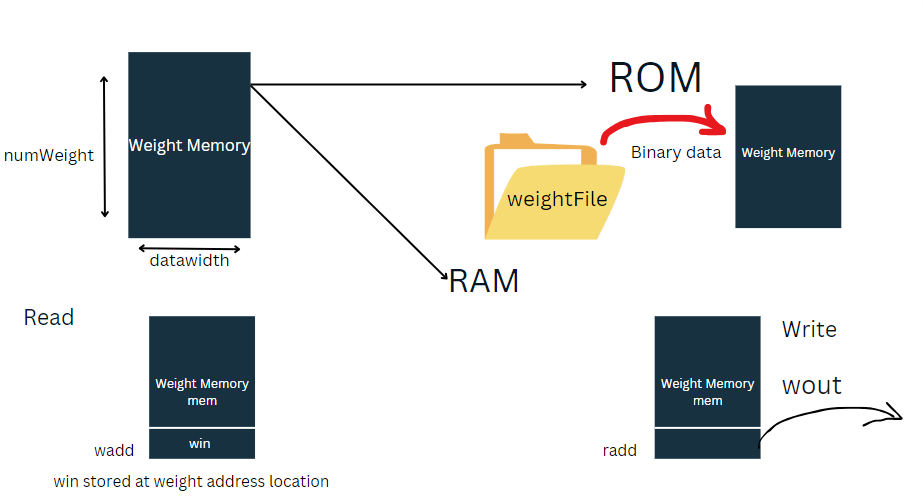

The Weight Memory

reg [dataWidth-1:0] mem [numWeight-1:0];

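The weight memory is therefore an array of numWeight words, each dataWidth bits wide. If the neuron is pretrained, the memory is initialised from a file; otherwise the weights are written in at run time. Reads are registered, so the data appears one clock after the read is enabled: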

`ifdef pretrained

initial

begin

$readmemb(weightFile, mem);

end

`else

always @(posedge clk)

begin

if (wen)

begin

mem[wadd] <= win;

end

end

`endif

always @(posedge clk)

begin

if (ren)

begin

wout <= mem[radd];

end

end

Let us see how the weights are loaded into the neuron when it is not pretrained.

On reset, the write address w_addr is set to all 1s. When a valid weight arrives and the configured layer and neuron numbers match this neuron, w_addr is incremented, so it wraps around to 0 and the first weight is stored at address 0.

always @(posedge clk)

begin

if(rst)

begin

w_addr <= {addressWidth{1'b1}};

wen <=0;

end

else if(weightValid & (config_layer_num==layerNo) & (config_neuron_num==neuronNo))

begin

w_in <= weightValue;

w_addr <= w_addr + 1;

wen <= 1;

end

else

wen <= 0;

end
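The wrap-around can be checked in isolation. The sketch below is a hypothetical, stripped-down address counter; the module name `waddr_demo` and the single `valid` port are assumptions made for illustration and are not part of the neuron source. It resets to all 1s and increments on every valid weight, so the first write address is 0.

```verilog
// Hypothetical stand-alone sketch of the write-address counter.
// `waddr_demo` and `valid` are illustrative names, not from the neuron source.
module waddr_demo #(parameter addressWidth = 4) (
    input  wire                    clk,
    input  wire                    rst,
    input  wire                    valid,   // stands in for the weightValid/layer/neuron match
    output reg  [addressWidth-1:0] w_addr,
    output reg                     wen
);
    always @(posedge clk) begin
        if (rst) begin
            w_addr <= {addressWidth{1'b1}}; // all 1s, so the first increment wraps to 0
            wen    <= 1'b0;
        end else if (valid) begin
            w_addr <= w_addr + 1'b1;        // addressWidth-bit add: 1111 + 1 = 0000
            wen    <= 1'b1;
        end else begin
            wen    <= 1'b0;
        end
    end
endmodule
```

After reset, the first clock edge with `valid` high gives `w_addr = 0` and `wen = 1` together, the next gives `w_addr = 1`, and so on.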

Now we multiply the delayed input myinputd with the weight memory output w_out.

always @(posedge clk)

begin

mul <= $signed(myinputd) * $signed(w_out);

end

Why did we add a delay to the input?

Each input must be multiplied with its corresponding weight.

The weight memory is read synchronously, so the weight appears one clock after its address is presented. The input therefore needs the same one-clock delay to stay aligned with it.
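The alignment can be sketched as a single register in front of the multiplier. This is a minimal illustration under assumptions: the module name `delay_mul_demo` and the `myinput` port are hypothetical, and only `myinputd`, `w_out` and `mul` follow the names used in this article.

```verilog
// Hypothetical sketch: align an input with a synchronous (one-cycle) weight read.
// `delay_mul_demo` and `myinput` are illustrative names.
module delay_mul_demo #(parameter dataWidth = 8) (
    input  wire                   clk,
    input  wire [dataWidth-1:0]   myinput,  // arrives in the cycle the read is issued
    input  wire [dataWidth-1:0]   w_out,    // weight, valid one cycle after the read
    output reg  [2*dataWidth-1:0] mul
);
    reg [dataWidth-1:0] myinputd;

    always @(posedge clk) begin
        myinputd <= myinput;                             // one-cycle delay matches the memory latency
        mul      <= $signed(myinputd) * $signed(w_out);  // signed product, 2*dataWidth bits
    end
endmodule
```

Without the `myinputd` register, each weight would be multiplied with the input that follows the one it belongs to.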

Now we add the product of the weight and the input to the previous sum.

assign comboAdd = mul + sum;

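Two problems can arise here: overflow and underflow.

If the MSBs of both mul and sum are 0 but the MSB of comboAdd is 1, two positive numbers have produced a negative result, which is overflow. In that case we set the MSB of sum to 0 and all the remaining bits to 1, so sum saturates at the largest positive value.

Underflow is handled similarly: if both MSBs are 1 but the MSB of comboAdd is 0, we set the MSB of sum to 1 and the remaining bits to 0, so sum saturates at the most negative value.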
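The saturation rule can be written as a small combinational block. The following is only a sketch: `sat_add_demo`, `WIDTH`, `a`, `b` and `y` are hypothetical names chosen for illustration, and in the neuron the same checks are applied to the registered sum rather than to a separate module.

```verilog
// Hypothetical combinational sketch of the saturation rule described above.
// `sat_add_demo`, `WIDTH`, `a`, `b` and `y` are illustrative names.
module sat_add_demo #(parameter WIDTH = 16) (
    input  wire [WIDTH-1:0] a,   // e.g. mul
    input  wire [WIDTH-1:0] b,   // e.g. sum
    output reg  [WIDTH-1:0] y
);
    wire [WIDTH-1:0] comboAdd = a + b;       // plain two's-complement add, may wrap

    always @(*) begin
        if (!a[WIDTH-1] & !b[WIDTH-1] & comboAdd[WIDTH-1])
            y = {1'b0, {(WIDTH-1){1'b1}}};   // overflow: clamp to largest positive value
        else if (a[WIDTH-1] & b[WIDTH-1] & !comboAdd[WIDTH-1])
            y = {1'b1, {(WIDTH-1){1'b0}}};   // underflow: clamp to most negative value
        else
            y = comboAdd;                    // no wrap: keep the sum
    end
endmodule
```

With `WIDTH = 8`, adding 0x70 and 0x20 wraps to 0x90, so the output is clamped to 0x7F; adding 0x90 and 0x90 wraps to 0x20, so the output is clamped to 0x80.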

The above does not cover the last multiplication, because after the last multiply we also need to add the bias to the sum:

assign BiasAdd = bias + sum;

always @(posedge clk)

begin

if(rst|outvalid)

sum <= 0;

else if((r_addr == numWeight) & muxValid_f)

begin

if(!bias[2*dataWidth-1] &!sum[2*dataWidth-1] & BiasAdd[2*dataWidth-1]) //If bias and sum are positive and after adding bias to sum, if sign bit becomes 1, saturate

begin

sum[2*dataWidth-1] <= 1'b0;

sum[2*dataWidth-2:0] <= {2*dataWidth-1{1'b1}};

end

else if(bias[2*dataWidth-1] & sum[2*dataWidth-1] & !BiasAdd[2*dataWidth-1]) //If bias and sum are negative and after addition if sign bit is 0, saturate

begin

sum[2*dataWidth-1] <= 1'b1;

sum[2*dataWidth-2:0] <= {2*dataWidth-1{1'b0}};

end

else

sum <= BiasAdd;

end

end

This sum now goes to the activation function.

The activation block supports two functions: ReLU and sigmoid.
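For intuition only, ReLU passes positive values through and replaces negative values with zero. The block below is a minimal registered sketch under assumptions: `relu_demo` and `WIDTH` are hypothetical names, and this is not the ReLU module instantiated in this design, whose parameters and width handling are not shown here.

```verilog
// Hypothetical minimal ReLU sketch; not the ReLU module used in this design.
module relu_demo #(parameter WIDTH = 16) (
    input  wire             clk,
    input  wire [WIDTH-1:0] x,    // signed two's-complement input
    output reg  [WIDTH-1:0] out
);
    always @(posedge clk) begin
        if (x[WIDTH-1])            // sign bit set: negative input
            out <= {WIDTH{1'b0}};  // clamp to zero
        else
            out <= x;              // positive input passes through
    end
endmodule
```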

generate

if(actType == "sigmoid")

begin:siginst

//Instantiation of ROM for sigmoid

Sig_ROM #(.inWidth(sigmoidSize),.dataWidth(dataWidth)) s1(

.clk(clk),

.x(sum[2*dataWidth-1-:sigmoidSize]), //send the sum value

.out(out)

);

end

else

begin:ReLUinst

ReLU #(.dataWidth(dataWidth),.weightIntWidth(weightIntWidth)) s1 (

.clk(clk),

.x(sum),

.out(out)

);

end

endgenerate

This was an overview of how a neuron is implemented. In the next editions we will go deeper.

Stay tuned
