In the last edition we learned how a neuron works.

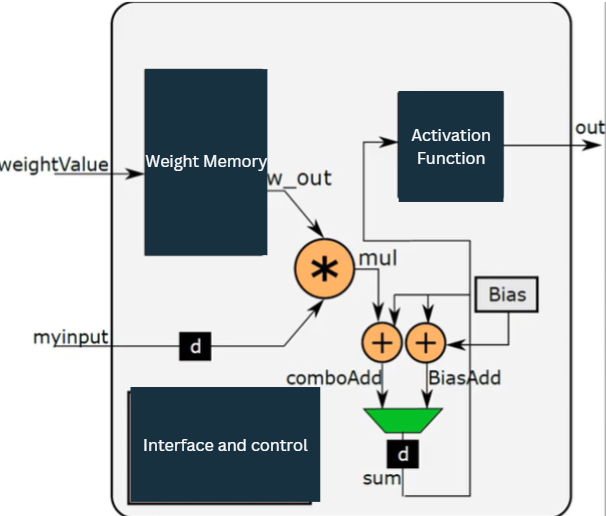

In this edition we will look at the hardware design of a neuron.

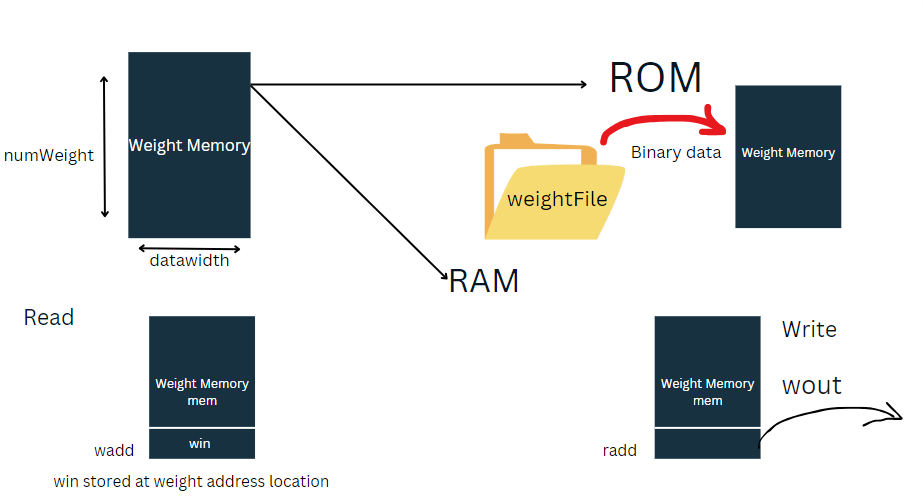
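In equation form, and ignoring the saturation logic described later, the neuron hardware computes the following. Here $x_i$ are the inputs, $w_i$ the weights held in the weight memory, $b$ the bias, $f$ the activation function (ReLU or sigmoid), and $N$ corresponds to the `numWeight` parameter:

$$
y = f\left(\sum_{i=0}^{N-1} w_i\,x_i + b\right)
$$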

The Weight Memory

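The weights are stored in a memory with numWeight entries, each dataWidth bits wide:

```verilog
reg [dataWidth-1:0] mem [numWeight-1:0];
```

The memory can be filled in one of two ways. If the network is pretrained, the weights are loaded from a file (weightFile) at initialization; otherwise they are written at run time through a write port. The weight memory code therefore looks like this: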

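```verilog
`ifdef pretrained
    // Pretrained network: load the weights from a binary text file at start-up
    initial
    begin
        $readmemb(weightFile, mem);
    end
`else
    // Otherwise the weights are written at run time, one per clock while wen is high
    always @(posedge clk)
    begin
        if (wen)
        begin
            mem[wadd] <= win;
        end
    end
`endif

    // Synchronous read: the weight at radd appears on wout one clock cycle later
    always @(posedge clk)
    begin
        if (ren)
        begin
            wout <= mem[radd];
        end
    end
```

Next, let us see how the weights are loaded into the neuron when it is not pretrained.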

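The write address w_addr is reset to all 1s. Whenever a valid weight arrives (weightValid) and the configured layer and neuron numbers (config_layer_num and config_neuron_num) match this neuron's layerNo and neuronNo, w_addr is incremented and wen is asserted. Because the address starts at all 1s, the first increment wraps it around to 0, so the first weight is stored at address 0, the next at address 1, and so on.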

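```verilog
always @(posedge clk)
begin
    if (rst)
    begin
        w_addr <= {addressWidth{1'b1}}; // all 1s: the first increment wraps to address 0
        wen <= 0;
    end
    else if (weightValid & (config_layer_num == layerNo) & (config_neuron_num == neuronNo))
    begin
        // The weight is addressed to this neuron: write it to the next address
        w_in <= weightValue;
        w_addr <= w_addr + 1;
        wen <= 1;
    end
    else
        wen <= 0;
end
```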
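To simulate the wrap-around of w_addr on its own, here is a minimal self-contained sketch that wraps the loading logic above in a module. The module name weight_loader, its port list, the 32-bit width of the configuration inputs and the default parameter values are hypothetical choices for illustration; in the neuron this logic presumably feeds the write port (wen, wadd, win) of the weight memory.

```verilog
// Hypothetical wrapper around the weight-loading logic described above.
module weight_loader #(
    parameter dataWidth    = 16,
    parameter addressWidth = 4,
    parameter layerNo      = 0,
    parameter neuronNo     = 0
) (
    input                         clk,
    input                         rst,
    input                         weightValid,
    input      [dataWidth-1:0]    weightValue,
    input      [31:0]             config_layer_num,   // assumed width
    input      [31:0]             config_neuron_num,  // assumed width
    output reg                    wen,
    output reg [addressWidth-1:0] w_addr,
    output reg [dataWidth-1:0]    w_in
);
    always @(posedge clk) begin
        if (rst) begin
            // All 1s, so the first increment wraps the address to 0
            w_addr <= {addressWidth{1'b1}};
            wen    <= 1'b0;
        end
        else if (weightValid & (config_layer_num == layerNo) & (config_neuron_num == neuronNo)) begin
            w_in   <= weightValue;
            w_addr <= w_addr + 1'b1;   // addressWidth-bit add, so all 1s + 1 wraps to 0
            wen    <= 1'b1;
        end
        else
            wen <= 1'b0;
    end
endmodule
```

After reset, the first matching weight is presented to the memory with w_addr equal to 0, the second with w_addr equal to 1, and wen drops as soon as weightValid goes low.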

Next, we multiply the delayed input myinputd by the weight read from the weight memory (w_out):

```verilog
always @(posedge clk)
begin
    mul <= $signed(myinputd) * $signed(w_out);
end
```

Why did we add a delay to the input?

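Each input must be multiplied by its corresponding weight. The weight memory has a synchronous (registered) read, so a weight appears on its output one clock cycle after its address is applied. The input is therefore delayed by one clock cycle (myinputd), so that each input and its weight reach the multiplier in the same cycle.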
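The alignment can be seen in a small simulation. The following is a minimal self-contained sketch that combines a synchronous-read memory, the one-cycle input delay and the multiplier. The module name mac_align, its ports and the four example weights are hypothetical; dataWidth defaults to 8 because the example weights are written as 8-bit literals.

```verilog
// Hypothetical sketch: synchronous-read weight memory plus a one-cycle input delay.
module mac_align #(
    parameter dataWidth    = 8,
    parameter addressWidth = 2
) (
    input                        clk,
    input                        ren,      // read enable, asserted together with a new input
    input  [addressWidth-1:0]    radd,     // address of the weight for this input
    input  [dataWidth-1:0]       myinput,
    output reg [2*dataWidth-1:0] mul
);
    reg [dataWidth-1:0] mem [0:(1<<addressWidth)-1];
    reg [dataWidth-1:0] w_out;
    reg [dataWidth-1:0] myinputd;

    // Example weights for illustration only (a real design loads them as shown earlier)
    initial begin
        mem[0] = 8'sd2; mem[1] = -8'sd3; mem[2] = 8'sd5; mem[3] = 8'sd1;
    end

    always @(posedge clk) begin
        if (ren)
            w_out <= mem[radd];    // weight appears one cycle after radd is applied
        myinputd <= myinput;       // delay the input by the same one cycle
        mul <= $signed(myinputd) * $signed(w_out);   // input and weight now belong together
    end
endmodule
```

An input presented together with its weight address produces its product on mul two clock edges later. If the multiplier used myinput directly instead of myinputd, inputs and weights would be misaligned by one position.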

Next, the product is added to the previous (running) sum:

```verilog
assign comboAdd = mul + sum;
```

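This addition can go wrong in two ways: overflow and underflow (negative overflow).

If the MSBs (sign bits) of both mul and sum are 0 but the MSB of comboAdd is 1, two positive numbers have produced a negative result, so the addition has overflowed. In that case the sum saturates to the largest positive value: its MSB is set to 0 and all remaining bits are set to 1.

Underflow is handled the same way. If the MSBs of both mul and sum are 1 but the MSB of comboAdd is 0, two negative numbers have produced a positive result, and the sum saturates to the most negative value: its MSB is set to 1 and all remaining bits are set to 0.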
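The saturation rule above can be captured in a small combinational module. This is a minimal sketch, not the author's code: the module name sat_add and the parameter width are hypothetical. In the neuron the same check applies to mul + sum (comboAdd) and to bias + sum (BiasAdd), with width equal to 2*dataWidth.

```verilog
// Hypothetical sketch of a saturating signed adder following the rule described above.
module sat_add #(
    parameter width = 16   // plays the role of 2*dataWidth in the neuron
) (
    input  [width-1:0] a,
    input  [width-1:0] b,
    output [width-1:0] y
);
    wire [width-1:0] raw = a + b;   // plain two's-complement add, wraps on overflow

    // Both operands non-negative but the result looks negative: overflow
    wire pos_ovf = !a[width-1] & !b[width-1] &  raw[width-1];
    // Both operands negative but the result looks non-negative: underflow
    wire neg_ovf =  a[width-1] &  b[width-1] & !raw[width-1];

    assign y = pos_ovf ? {1'b0, {(width-1){1'b1}}} :   // largest positive value
               neg_ovf ? {1'b1, {(width-1){1'b0}}} :   // most negative value
               raw;
endmodule
```

With width = 8, for example, 100 + 50 saturates to 127 (8'h7F), -100 + (-50) saturates to -128 (8'h80), and operands of opposite sign can never overflow, so they pass through unchanged.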

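The accumulation above does not cover the final step. After the last product has been accumulated, the bias must also be added, which is done with a separate adder that adds the bias to the accumulated sum:

```verilog
assign BiasAdd = bias + sum;
```

When the read address r_addr reaches numWeight (that is, once every weight has been read) and muxValid_f is asserted, the bias is added with the same overflow and underflow saturation: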

```verilog
always @(posedge clk)
begin
    if (rst | outvalid)
        sum <= 0;
    else if ((r_addr == numWeight) & muxValid_f)
    begin
        // bias and sum are both positive, but the sign bit of BiasAdd is 1: overflow, saturate
        if (!bias[2*dataWidth-1] & !sum[2*dataWidth-1] & BiasAdd[2*dataWidth-1])
        begin
            sum[2*dataWidth-1] <= 1'b0;
            sum[2*dataWidth-2:0] <= {(2*dataWidth-1){1'b1}};
        end
        // bias and sum are both negative, but the sign bit of BiasAdd is 0: underflow, saturate
        else if (bias[2*dataWidth-1] & sum[2*dataWidth-1] & !BiasAdd[2*dataWidth-1])
        begin
            sum[2*dataWidth-1] <= 1'b1;
            sum[2*dataWidth-2:0] <= {(2*dataWidth-1){1'b0}};
        end
        else
            sum <= BiasAdd;
    end
end
```

The final sum then goes to the activation function.

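Two activation functions are supported: ReLU and sigmoid. A generate block instantiates one of them depending on the actType parameter. The sigmoid is implemented as a lookup ROM (Sig_ROM) addressed by the top sigmoidSize bits of the sum: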

```verilog
generate
    if (actType == "sigmoid")
    begin: siginst
        // Instantiation of ROM for sigmoid
        Sig_ROM #(.inWidth(sigmoidSize), .dataWidth(dataWidth)) s1 (
            .clk(clk),
            .x(sum[2*dataWidth-1-:sigmoidSize]), // send the top sigmoidSize bits of the sum
            .out(out)
        );
    end
    else
    begin: ReLUinst
        ReLU #(.dataWidth(dataWidth), .weightIntWidth(weightIntWidth)) s1 (
            .clk(clk),
            .x(sum),
            .out(out)
        );
    end
endgenerate
```

In the next editions we will go deeper. This was an overview of the implementation of a neuron!
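The ReLU module instantiated above is not shown in this edition. As a rough preview, here is a minimal sketch of a module with the same interface (clk, x, out and the dataWidth and weightIntWidth parameters). The body is an assumption, not the author's implementation: it assumes the 2*dataWidth-bit sum carries weightIntWidth extra integer bits at the top, which are dropped when narrowing to dataWidth bits, and it saturates when those bits are not all zero.

```verilog
// Hypothetical ReLU sketch; the fixed-point alignment below is an assumption.
module ReLU #(
    parameter dataWidth      = 16,
    parameter weightIntWidth = 4
) (
    input                      clk,
    input  [2*dataWidth-1:0]   x,
    output reg [dataWidth-1:0] out
);
    always @(posedge clk) begin
        if ($signed(x) >= 0) begin
            // Sign bit plus the dropped integer bits must all be 0, otherwise x is too large
            if (|x[2*dataWidth-1 -: weightIntWidth+1])
                out <= {1'b0, {(dataWidth-1){1'b1}}};            // positive saturation
            else
                out <= x[2*dataWidth-1-weightIntWidth -: dataWidth];
        end
        else
            out <= {dataWidth{1'b0}};                            // negative input: output 0
    end
endmodule
```

The output is registered, so it follows the sum by one clock cycle; negative sums give 0, and positive sums that do not fit in dataWidth bits clip to the largest positive value.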

Stay tuned
